The launch of the Apple Vision Pro at the beginning of 2024 marked a significant milestone in the world of augmented reality and mixed reality.

As Apple pushes the boundaries of immersive technology with its groundbreaking headset, developers are presented with an exciting yet challenging opportunity to create apps that are able to take full advantage of this new device.

However, Apple Vision Pro app development comes with a unique set of hurdles.

In this blog post, we will explore:

- The current interest of developers

- Key development challenges that our developers at GIANTY have encountered while creating several apps for the Vision Pro, along with strategies for overcoming them

Interest from Developers in Apple Vision Pro App Development

From adapting to a new spatial computing interface to managing performance constraints and optimizing for a completely new user experience, developers face a steep learning curve.

Because of these significant development challenges, many app developers have shown little interest in building applications for the Apple Vision Pro and have been reluctant to prioritize it in their roadmaps.

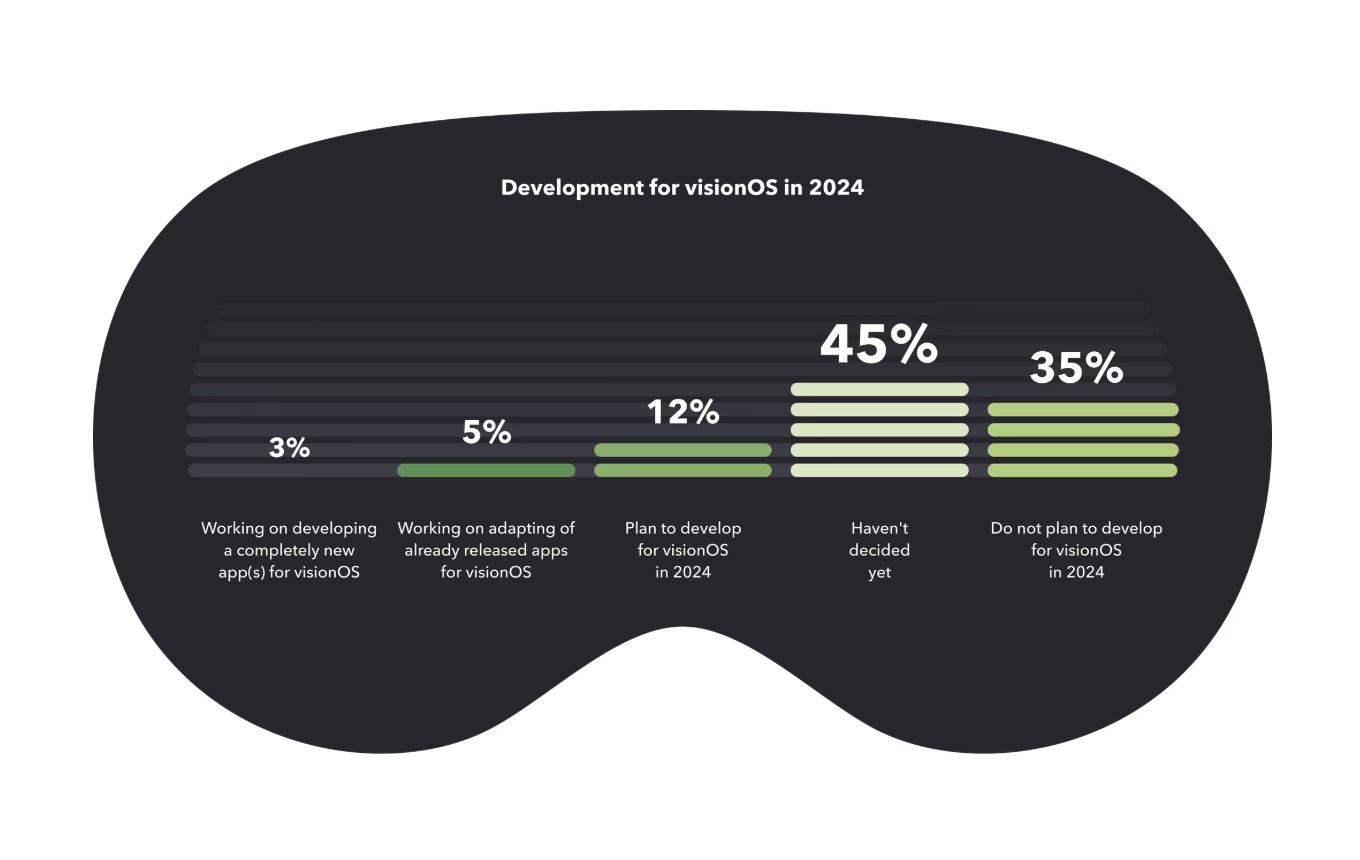

A survey conducted by Wccftech reveals that the largest group of developers, 45%, remains uncertain about supporting the Apple Vision Pro, while 35% of participants have decided against Apple Vision Pro app development altogether.

GIANTY counts itself among the innovators who see the immense potential that Apple Vision Pro app development offers.

We are no strangers to the complexities of cutting-edge technology, and our work integrating our AI chatbot, ChatGIANTY, into the Apple Vision Pro as a virtual assistant is a testament to our willingness to embrace new challenges.

GIANTY is also working on a soon-to-be-released app developed specifically for the Vision Pro. It is aimed at creatively inclined users interested in graphic design, with media editing and 3D modeling among its features.

We are determined to continue crafting immersive experiences for this powerful platform, taking on the development challenges with enthusiasm and expertise.

Key Challenges in Apple Vision Pro Development

Challenge 1: New Technology Means Longer Development Time

One of the most immediate hurdles of Apple Vision Pro app development is the longer development time required to understand and integrate the device’s technology.

1. Learning the Technology

Developing for the Apple Vision Pro requires a deep understanding of features like face tracking, spatial audio, and immersive visuals to ensure successful implementation.

That’s why developers must spend a lot of time learning how Apple Vision Pro’s features work in unison, as they not only affect how apps are developed but also shape how users will interact with the app.

To help with the process, they can engage with online communities and participate in developer forums. These platforms provide valuable insights, troubleshooting tips and best practices from other developers facing similar challenges.

Another effective approach is building small prototypes to experiment with these new technologies. This hands-on approach allows developers to test, iterate and better understand how these features integrate into their apps. Prototyping not only accelerates learning but also helps refine app functionality and user experience during the early stages of development.

2. Unfamiliarity Invites Bugs

The relative newness of the platform means the software development tools for the Apple Vision Pro aren’t as stable as those of Apple’s more established products, making developers more prone to encountering errors during the development process than on other platforms.

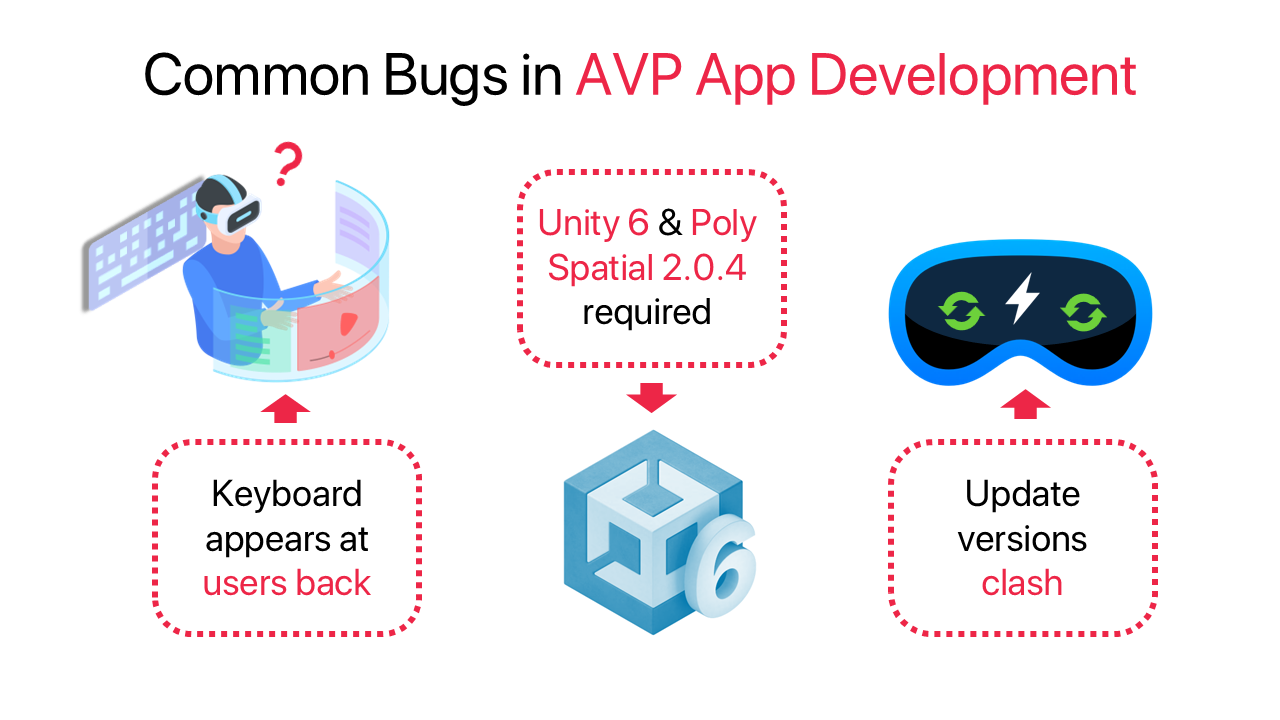

Our developers at GIANTY have noticed a few common issues:

- Apple’s default keyboard is always positioned behind the user’s back. Because this issue has not been fixed, our developers spent approximately three and a half days creating custom keyboards as a workaround.

- Another example involves Unity. When using a version older than Unity 6 with full space permission, users are unable to see their hands when they are covered by the UI. To address this, our developers needed 3 days to upgrade to Unity 6 and PolySpatial 2.0.4 and fix the bugs.

- While regular platform updates may fix many of these issues, new versions sometimes conflict with one another, introducing further issues that need debugging.

Development teams need to be cautious during testing and debugging and should allocate sufficient time for both.

3. Adapting to Unique Features

The Vision Pro’s unique and advanced features offer exciting possibilities but also require developers to rethink how users interact with their apps.

For example, the device introduces two tracking features that open up new ways for users to interact with apps, requiring developers to rethink traditional input methods:

Indirect Tracking:

The Apple Vision Pro allows users to interact with objects simply by looking at them, thanks to advanced eye-tracking technology. A white dot shows users where their gaze is focused, helping them identify the selected object.

- Pros: Low effort, as the user only needs to look at an object to interact with it, regardless of its distance.

- Cons: The white dot is small and the outline of a selected object is very faint, making it difficult to tell whether an object has actually been selected.

Direct Tracking:

Users are able to interact directly with objects using their hands, offering an immersive experience.

- Pros: Users will experience a more realistic and intuitive interaction due to the hands-on approach, especially because objects in the virtual environment are mostly made in 3D

- Cons: If the objects are too small or positioned too close to one another, selecting them accurately becomes challenging.

Developers need to understand when and how to apply each of these two tracking methods. Direct Tracking is better suited to scenarios where users want a more realistic interaction that involves touching, while Indirect Tracking can be applied more broadly, especially for CTAs and Triggered Objects.
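The guidance above can be captured as a small decision helper. The following is a minimal sketch, not GIANTY’s actual logic: the function name `choose_tracking`, the element kinds, and the size and spacing thresholds are all hypothetical placeholders that would need tuning through user testing.

```python
from dataclasses import dataclass

# Hypothetical thresholds (in centimeters); tune them through user testing.
MIN_DIRECT_SIZE_CM = 4.0      # smaller objects are hard to hit with a finger
MIN_DIRECT_SPACING_CM = 2.0   # tighter spacing invites accidental selection


@dataclass
class Target:
    kind: str          # "cta", "trigger", or "object" (illustrative labels)
    size_cm: float     # smallest dimension of the interactive object
    spacing_cm: float  # distance to the nearest neighboring object
    wants_touch: bool  # True when a hands-on feel matters for the experience


def choose_tracking(target: Target) -> str:
    """Return "direct" or "indirect" for a given interactive target."""
    # CTAs and triggered objects default to gaze-based (indirect) input.
    if target.kind in ("cta", "trigger"):
        return "indirect"
    # Small or crowded objects are hard to select accurately by hand.
    if target.size_cm < MIN_DIRECT_SIZE_CM or target.spacing_cm < MIN_DIRECT_SPACING_CM:
        return "indirect"
    # Otherwise use hands only when the realistic, tactile feel is the goal.
    return "direct" if target.wants_touch else "indirect"
```

Encoding the rule in one place makes it easier to revisit the thresholds as testing reveals what users can comfortably select by hand.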

Along with the challenge of adjusting to these new input methods, developers must rework existing controls.

For applications previously developed for mobile or desktop devices, a simple port to the Vision Pro is insufficient. Developers must consider how users will interact with the virtual environment, and in many cases they will need to design entirely new controls that allow for seamless navigation and interaction within this space, responding to eye movements, hand gestures, and even facial expressions.

Challenge 2: Complex User Interface Design

Designing a UI for mixed reality requires more than just adapting to new input methods. Developers must design UIs that seamlessly integrate with virtual environments, creating an intuitive experience that users can easily navigate.

This presents several challenges:

- Viewing a spatial environment using the headset can sometimes induce cybersickness or difficulty maintaining concentration. To address this, developers often design user experiences or interaction flows with a duration of around 15 minutes to ensure comfort and engagement.

- Excessive lighting effects, such as halos or glows, can make the UI appear blurry and broken when viewed at close range from various angles, so it is best to minimize the use of such effects.

Exporting a Design

Key Considerations for High-Quality Design Exports:

- Separate Backgrounds and Content: Export backgrounds and content elements as separate files for flexibility and easier editing.

- Isolate Text: Treat text as a distinct element and export it separately from other design components.

- Optimize Resolution:

  - Adjust Resolution: Increase the resolution during export for sharper visuals, especially when dealing with high-resolution displays.

  - Consider the 4x Rule: For the highest quality, export at four times the pixel count of the desired resolution, which means doubling both width and height (e.g., if the final output is 1080p, export at 4K). This technique is particularly useful for designs intended for high-resolution displays or potential future scaling needs.
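The arithmetic behind the 1080p-to-4K example can be sketched as follows. This assumes that "four times" refers to total pixel count, which matches the example: doubling both width and height quadruples the number of pixels. The function name `export_size` is illustrative only.

```python
import math


def export_size(width: int, height: int, pixel_multiplier: float = 4.0) -> tuple[int, int]:
    """Scale a target size so the export has `pixel_multiplier` times the pixels."""
    # Multiplying the pixel count by k means scaling each side by sqrt(k).
    scale = math.sqrt(pixel_multiplier)
    return round(width * scale), round(height * scale)


print(export_size(1920, 1080))  # (3840, 2160): 1080p exported at 4K UHD
```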

2D or 3D UI

Understanding the distinction between 2D and 3D UIs is crucial for effective mixed reality design:

- 2D UIs: While simpler to implement, they can appear unnatural and jarring in 3D environments, especially when viewed from different angles

- 3D UIs: Offer a more immersive experience but can increase development complexity and potentially impact performance.

The UI Should Feel Natural and Immersive

To achieve that, developers must ensure the UI adapts to the spatial context in which it is used, carefully considering the layout and positioning of elements within the virtual environment.

It is also beneficial to avoid overcomplicating the UI. By keeping interactions simple and minimizing cognitive load, users can more easily navigate and interact with the interface.

Finally, utilizing spatial cues can effectively guide users’ interactions naturally within the 3D space.

Challenge 3: Optimizing Mixed Reality Performance

Vision Pro applications must prioritize smooth performance within virtual environments. Developers need to ensure high frame rates and efficient use of processing power to minimize lag or jitter, which can significantly disrupt the immersive experience.

- Real-Time 3D Rendering and Mapping: To create truly immersive mixed-reality experiences on Apple Vision Pro, real-time 3D rendering and environment mapping are crucial. However, achieving this requires careful optimization to ensure smooth performance while maintaining high visual fidelity.

- Maximizing Potential: To fully leverage the Vision Pro’s capabilities, developers must master 3D modeling techniques, integrate spatial audio, and utilize advanced media formats. Striking a balance between these complex features and maintaining optimal performance is crucial for delivering a high-quality user experience.

- Prioritizing User Comfort: While striving for a visually rich experience, developers must prioritize user comfort. Excessive visual or auditory stimulation can lead to fatigue, motion sickness, or even discomfort. It’s crucial to create an immersive experience that is engaging without being overwhelming.

To maintain good performance without negatively affecting the user experience, consider these guidelines:

- Target Frame Rate: Aim for a consistent 90 frames per second (FPS)

- Tris Limit: Keep the total triangle count for an entire game below 500,000 to reduce the rendering load on the GPU

- Batch Limit: Maintain a batch count between 300 and 350. Batching multiple objects together for rendering can significantly improve performance.

- Lighting: Use Unlit shaders whenever possible to simplify lighting calculations. While Lit shaders can create more realistic lighting effects, they also increase rendering overhead.

- Post-Processing: Avoid or minimize the use of post-processing effects like bloom, depth of field, and motion blur. These effects can be computationally expensive.

- Transparency: Limit the use of transparent materials and effects. Transparency can increase draw calls and overdraw, leading to performance issues.
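The numeric guidelines above lend themselves to an automated check. The sketch below is a hypothetical helper, not part of any Unity or Apple tooling: the `FrameStats` fields and function names are assumptions, and collecting the measurements (for example, from a profiler) is outside its scope. It also shows that a 90 FPS target leaves roughly 11.1 ms to render each frame.

```python
from dataclasses import dataclass

# Budgets taken from the guidelines above.
TARGET_FPS = 90
MAX_TRIANGLES = 500_000
MAX_BATCHES = 350


@dataclass
class FrameStats:
    frame_time_ms: float  # measured time to render one frame
    triangles: int        # total triangle count being rendered
    batches: int          # number of render batches


def frame_budget_ms(fps: int = TARGET_FPS) -> float:
    """Time available per frame at the given frame rate."""
    return 1000.0 / fps


def check_budgets(stats: FrameStats) -> list[str]:
    """Return a human-readable warning for every exceeded budget."""
    warnings = []
    budget = frame_budget_ms()
    if stats.frame_time_ms > budget:
        warnings.append(f"frame time {stats.frame_time_ms:.1f} ms exceeds {budget:.1f} ms")
    if stats.triangles >= MAX_TRIANGLES:
        warnings.append(f"{stats.triangles} triangles, budget is below {MAX_TRIANGLES}")
    if stats.batches > MAX_BATCHES:
        warnings.append(f"{stats.batches} batches, budget is {MAX_BATCHES}")
    return warnings
```

Running a check like this on captured stats during testing can flag regressions before they become noticeable as lag or jitter in the headset.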

Challenge 4: Limited Technological Capacity

1. Shader Graph

Custom shader development for the Apple Vision Pro is currently limited to the default Unity URP shader within Shader Graph, restricting access to High-Level Shader Language (HLSL) and potentially limiting the creation of more advanced or tailored shaders.

This can pose challenges, particularly when integrating depth texture nodes, which often requires significant manual customization within Shader Graph.

To overcome these limitations, developers can explore options like creating custom Shader Graph nodes and leveraging Unity URP extensions. Additionally, future updates from Apple or Unity may introduce greater flexibility and performance improvements for shader development on the Vision Pro platform.

2. VFX Implementation

VFX development for the Apple Vision Pro currently faces limitations due to the rendering system. The billboard rendering system, which is commonly used for 2D effects, cannot effectively rotate with the user’s view, necessitating the use of volumetric particles for more dynamic visual effects.

Furthermore, not all particle system features are fully supported on the Vision Pro, making it challenging to implement complex VFX. This requires developers to use the Scene Validation tool to check each particle system for compatibility and identify any potential issues.

These limitations can present challenges for developers aiming to achieve visually rich and immersive experiences on the Vision Pro platform.

3. AR Plane Detection

The Apple Vision Pro’s current AR capabilities present some limitations. Plane detection is primarily limited to vertical and horizontal surfaces, which can restrict interaction possibilities and limit the range of real-world scenarios that can be effectively augmented.

While object detection and classification are functional, they can exhibit instability, making them unreliable for production-level applications that require consistent and accurate object recognition.

To address these limitations, developers can explore strategies such as developing and integrating custom algorithms for plane and object detection to enhance accuracy and expand the range of detectable surfaces and objects. Rigorous testing and continuous refinement of existing AR features can also help improve stability and reliability over time.

4. Hand Detection

The Apple Vision Pro’s hand detection system currently exhibits some limitations. For instance, it can struggle to accurately track rapid hand movements, leading to potential delays or inaccuracies in gesture recognition.

Additionally, the system may have difficulty recognizing hand gestures when fingers are obscured, such as when the hand is facing away from the user.

These limitations can hinder the system’s responsiveness and accuracy in certain scenarios, ultimately affecting the overall user experience.

5. Battery Life

The Apple Vision Pro is powered by an external battery pack that lasts about two hours on a single charge. For longer sessions, the battery pack can be kept connected to a power source.

Given its immersive design, optimizing apps for power efficiency is paramount. High power consumption, driven by its display, sensors, and real-time processing, can rapidly deplete the battery.

Developers can optimize performance by reducing graphics-intensive tasks, lowering resolution for non-critical visuals, and minimizing background processes. Monitoring battery usage provides valuable insights, enabling developers to identify power-consuming features and optimize code accordingly.
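One way to turn battery monitoring into actionable insight is to group power readings by feature and rank them. The sketch below assumes readings have already been collected as (feature, watts) pairs; the function name `rank_by_power`, the feature labels, and the sample values are made up for illustration and are not measurements from a real device.

```python
from collections import defaultdict
from statistics import mean


def rank_by_power(samples: list[tuple[str, float]]) -> list[tuple[str, float]]:
    """Average the power readings per feature and sort from highest to lowest."""
    by_feature = defaultdict(list)
    for feature, watts in samples:
        by_feature[feature].append(watts)
    averages = [(feature, mean(values)) for feature, values in by_feature.items()]
    return sorted(averages, key=lambda item: item[1], reverse=True)


# Made-up readings for illustration only; not measurements from a real device.
samples = [("particles", 2.0), ("video", 2.5), ("particles", 4.0), ("idle_ui", 1.0)]
for feature, watts in rank_by_power(samples):
    print(f"{feature}: {watts:.1f} W")
```

The features at the top of the ranking are the first candidates for reduced graphics load or lower resolution.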

Challenge 5: Smaller Audience

The Apple Vision Pro, while an innovative product, currently targets a niche audience.

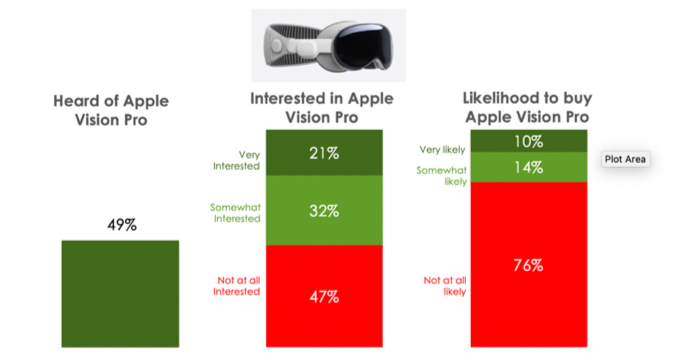

Hub Entertainment Research’s “Connected Home” study, which surveyed 5,026 US consumers, revealed that while awareness of the Vision Pro is moderate (49%), only 10% are likely to purchase it.

This suggests a smaller initial market compared to other Apple devices, primarily driven by early adopters and loyal Apple enthusiasts.

While interest in XR headsets is growing, consumer focus largely leans towards gaming experiences. By contrast, the Vision Pro’s initial focus appears to be on productivity, creative industries, and immersive experiences.

Developers can effectively navigate this landscape by aligning their app development with the Vision Pro’s strengths. Focusing on productivity tools, immersive media experiences, or innovative educational apps can help developers cater to the initial, albeit niche, audience and establish a strong foothold within the Vision Pro ecosystem.

Challenge 6: Originality

To truly capitalize on the Vision Pro’s unique capabilities, developers must move beyond replicating existing app formats from other platforms. Instead, they should focus on creating original experiences that leverage the device’s unique features in innovative ways.

Apps need to offer something truly special, something that users cannot easily find on other devices.

This could involve pioneering new forms of immersive gaming, creating interactive works of art, or developing next-generation productivity tools that redefine how we interact with digital spaces. Ultimately, developers must create applications that provide significant value to users and justify the Vision Pro’s premium price tag.

High Risk, High Reward

The primary development challenges associated with Apple Vision Pro app development stem from the novelty of the technology itself. As a relatively new product, developers are still in the process of familiarizing themselves with its capabilities and limitations.

This technical uncertainty, coupled with doubts about consumer adoption, can discourage developers from investing significant time, effort, and resources into app development for an unproven market.

However, these challenges should not be viewed as insurmountable obstacles.

Apple boasts a loyal consumer base, and as the Vision Pro ecosystem matures and gains traction, there will be a growing demand for innovative applications that fully leverage its unique features.

This presents a significant opportunity for developers to capitalize on this emerging market and create groundbreaking user experiences.

Developers like those at GIANTY, who are willing to invest in understanding and navigating the steep learning curve of Apple Vision Pro app development, will be well-positioned to capitalize on a highly specialized, yet enthusiastic, market.

The Vision Pro’s potential to revolutionize industries such as productivity, entertainment, and education, coupled with its ability to create truly immersive experiences, suggests that it will likely become an essential tool for professionals and creatives in the long term.

By remaining committed to mastering this new technology and focusing on delivering value through original and innovative applications, developers can play a crucial role in shaping the future of spatial computing and mixed-reality experiences.